Android: Retrieving the Camera preview as a Pixel Array

Posted by Dimitri | Mar 24th, 2011 | Filed under Featured, Programming

This post explains how to take the live images created by Android’s camera preview feature and return them as an RGB array, which can be used for all sorts of things, such as custom effect previews and real-time image filtering. It uses the CameraPreview class that comes bundled with the Android SDK because it already has everything set up, so it is just a matter of inserting more code. The techniques shown here work with Android 2.1 and should work with versions 1.6 and 1.5.

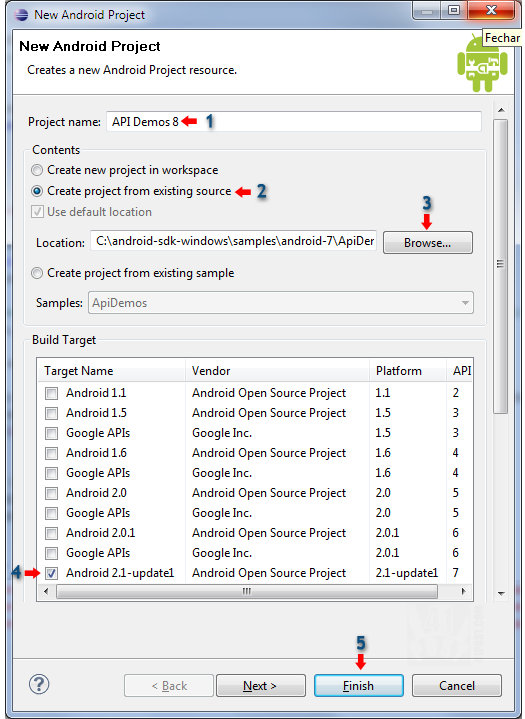

The first thing to do is to import the API Demos project into your workspace. To do that, in Eclipse, click File -> New -> Android Project. In the dialog that opens, give the project a name in the first field (like API Demos 8) and select ‘Create project from existing source’. Now browse to <Android SDK folder>\samples\android-7\ApiDemos. Finally, mark Android 2.1 as the Build Target and click Finish:

This image explains the above process.

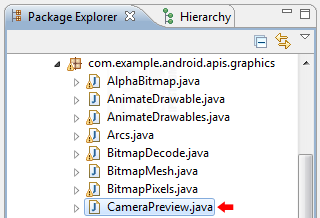

After that, find the CameraPreview.java file, which is located in the com.example.android.apis.graphics package.

The code from this class is going to be changed.

Inside this file, you will find two classes: CameraPreview and Preview. The changes are going to be made to the latter. They add a callback that runs code for every frame captured by the camera. Android already has an interface for that, nested inside the Camera class, called PreviewCallback, and that is what is going to be used. Then, an array to store each frame’s pixels as RGB values is initialized, and for that it is necessary to know the preview width and height. Finally, a method is added to convert each NV21 frame into the RGB array.

Here’s the code with all the changes:

    package com.example.android.apis.graphics;

    // Omitted package imports
    // ...
    import android.hardware.Camera.*;

    // Omitted CameraPreview Activity
    // ...

    class Preview extends SurfaceView implements SurfaceHolder.Callback, PreviewCallback {
        SurfaceHolder mHolder;
        Camera mCamera;

        // This variable is responsible for getting and setting the camera settings
        private Parameters parameters;
        // This variable stores the camera preview size
        private Size previewSize;
        // This array stores one packed ARGB integer per pixel
        private int[] pixels;

        Preview(Context context) {
            super(context);

            // Install a SurfaceHolder.Callback so we get notified when the
            // underlying surface is created and destroyed.
            mHolder = getHolder();
            mHolder.addCallback(this);
            mHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);
        }

        public void surfaceCreated(SurfaceHolder holder) {
            // The Surface has been created, acquire the camera and tell it where
            // to draw.
            mCamera = Camera.open();
            try {
                mCamera.setPreviewDisplay(holder);

                // Sets the camera callback to be the one defined in this class
                mCamera.setPreviewCallback(this);

                // Initialize the variables
                parameters = mCamera.getParameters();
                previewSize = parameters.getPreviewSize();
                pixels = new int[previewSize.width * previewSize.height];
            } catch (IOException exception) {
                mCamera.release();
                mCamera = null;
                // TODO: add more exception handling logic here
            }
        }

        public void surfaceDestroyed(SurfaceHolder holder) {
            // Surface will be destroyed when we return, so stop the preview.
            // Because the CameraDevice object is not a shared resource, it's very
            // important to release it when the activity is paused.
            mCamera.stopPreview();
            mCamera.release();
            mCamera = null;
        }

        public void surfaceChanged(SurfaceHolder holder, int format, int w, int h) {
            // Now that the size is known, set up the camera parameters and begin
            // the preview.
            parameters.setPreviewSize(w, h);

            // Set the camera's settings
            mCamera.setParameters(parameters);

            // The preview size may differ from the one read in surfaceCreated(),
            // so refresh it and resize the array to match the incoming frames
            previewSize = mCamera.getParameters().getPreviewSize();
            pixels = new int[previewSize.width * previewSize.height];

            mCamera.startPreview();
        }

        @Override
        public void onPreviewFrame(byte[] data, Camera camera) {
            // Transforms NV21 pixel data into RGB pixels
            decodeYUV420SP(pixels, data, previewSize.width, previewSize.height);

            // Output the value of the top left pixel in the preview to LogCat
            Log.i("Pixels", "The top left pixel has the following RGB (hexadecimal) values:"
                    + Integer.toHexString(pixels[0]));
        }

        // Method from Ketai project! Not mine! See below...
        void decodeYUV420SP(int[] rgb, byte[] yuv420sp, int width, int height) {
            final int frameSize = width * height;

            for (int j = 0, yp = 0; j < height; j++) {
                int uvp = frameSize + (j >> 1) * width, u = 0, v = 0;
                for (int i = 0; i < width; i++, yp++) {
                    int y = (0xff & ((int) yuv420sp[yp])) - 16;
                    if (y < 0)
                        y = 0;
                    if ((i & 1) == 0) {
                        v = (0xff & yuv420sp[uvp++]) - 128;
                        u = (0xff & yuv420sp[uvp++]) - 128;
                    }

                    int y1192 = 1192 * y;
                    int r = (y1192 + 1634 * v);
                    int g = (y1192 - 833 * v - 400 * u);
                    int b = (y1192 + 2066 * u);

                    if (r < 0) r = 0; else if (r > 262143) r = 262143;
                    if (g < 0) g = 0; else if (g > 262143) g = 262143;
                    if (b < 0) b = 0; else if (b > 262143) b = 262143;

                    rgb[yp] = 0xff000000 | ((r << 6) & 0xff0000) | ((g >> 2) & 0xff00) | ((b >> 10) & 0xff);
                }
            }
        }
    }

The first required alteration is to add the import android.hardware.Camera.*; statement at the beginning of the code. Then, declare that the Preview class also implements PreviewCallback. Right after that, three variables are created: the array that stores the pixels as RGB values, and two others that are used to get the camera’s preview size and initialize the array. Then, the camera object is initialized with Camera.open() inside surfaceCreated(), which creates a handle to the camera hardware. As observed by Tobias, if you are using Android 2.3, you will need to replace the Camera.open() line with the block of code from his comment below.

Inside the try block, right after the setPreviewDisplay() call, the preview callback of mCamera is set to the one declared in this class. It is important to add this line after setting the preview display. Trying to set the callback before that will throw an exception. Still inside the same try block, all the newly added variables are initialized. This has to be done so that the pixels array has the same number of elements as the preview image.

Since a Parameters object has already been initialized, there is no need to declare it as a local variable inside the surfaceChanged() method, meaning that it is now possible to remove this line: Camera.Parameters parameters = mCamera.getParameters();

Then, at the end of this class, two methods have been added: onPreviewFrame() and decodeYUV420SP(). The first one defines what the callback does for each preview frame: it calls the decodeYUV420SP() method to transform the data byte[] array into an RGB array. This callback also prints the RGB value of the top left pixel to LogCat.

The decodeYUV420SP() method isn’t mine! It was copied from the awesome Ketai Project, which aims to bring the Processing framework to Android. It can be found here. This method is what makes it all possible because, without it, we would only have images in the NV21 format, which is Android’s default format for the camera preview.
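To make the NV21 layout a bit more concrete, here is a minimal sketch in plain Java (no Android classes). It is not part of the original sample: the class and method names are made up for illustration, and it assumes an even width and height. An NV21 frame holds width × height luma (Y) bytes followed by half as many interleaved V/U bytes, so a buffer shorter than that would push a decoder past the end of the array. The Y plane alone already gives a grayscale image.

```java
public class Nv21Layout {

    // Expected byte count of one NV21 frame, assuming even width and height:
    // width*height luma (Y) bytes followed by width*height/2 interleaved V/U bytes.
    static int expectedLength(int width, int height) {
        return width * height * 3 / 2;
    }

    // Builds a grayscale ARGB array from the Y plane alone (chroma bytes are ignored).
    // The raw luma value is used directly, without the range expansion
    // that decodeYUV420SP() applies.
    static int[] lumaToGray(byte[] nv21, int width, int height) {
        if (nv21.length < expectedLength(width, height)) {
            throw new IllegalArgumentException("buffer too small for " + width + "x" + height);
        }
        int frameSize = width * height;
        int[] gray = new int[frameSize];
        for (int i = 0; i < frameSize; i++) {
            int y = nv21[i] & 0xff; // bytes are signed in Java
            gray[i] = 0xff000000 | (y << 16) | (y << 8) | y;
        }
        return gray;
    }

    public static void main(String[] args) {
        // A hypothetical 2x2 frame: four Y bytes, then one V/U pair.
        byte[] frame = { 0, (byte) 128, (byte) 255, 16, (byte) 128, (byte) 128 };
        int[] gray = lumaToGray(frame, 2, 2);
        System.out.println(expectedLength(320, 240));     // 115200
        System.out.println(Integer.toHexString(gray[1])); // ff808080
    }
}
```

Checking data.length against the expected size at the top of onPreviewFrame() is one cheap way to notice a mismatch between the frame and previewSize before decoding.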

The method fills the passed int array with the NV21 frame converted to RGB, one packed integer per pixel. Printed in hexadecimal, each pair of digits corresponds to one channel. The first pair is always FF (255) and is the alpha channel, the second corresponds to the red channel, the third to the green, and the fourth to the blue. E.g.: ffff0000 is red (255,0,0), without any green or blue, while ff00ff00 (0,255,0) is green, ffffff00 (255,255,0) is yellow, and so on.
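If you need the channels as separate numbers, they can be pulled out of the packed integer with shifts and masks. The snippet below is just a small plain-Java sketch; the ArgbChannels class and its unpack() helper are names I made up for the example.

```java
public class ArgbChannels {

    // Splits a packed 0xAARRGGBB integer into {alpha, red, green, blue}, each 0..255.
    static int[] unpack(int argb) {
        int a = (argb >>> 24) & 0xff;
        int r = (argb >> 16) & 0xff;
        int g = (argb >> 8) & 0xff;
        int b = argb & 0xff;
        return new int[] { a, r, g, b };
    }

    public static void main(String[] args) {
        int yellow = 0xffffff00;
        int[] c = unpack(yellow);
        // Prints: ffffff00 -> A=255 R=255 G=255 B=0
        System.out.println(Integer.toHexString(yellow)
                + " -> A=" + c[0] + " R=" + c[1] + " G=" + c[2] + " B=" + c[3]);
    }
}
```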

And that’s it!

Thanks to Eric Pavey for the Android Adventures post series, which points out where to find the NV21 to RGB conversion method.

hello.

an image is a two dimensional array, right?

in the source code:

Log.i("Pixels", "The top right pixel has the following RGB (hexadecimal) values:"

+Integer.toHexString(pixels[0]));

so, how can i get the other pixels by their two dimensional position, such as the pixel at (20,2)?

thx for information. :)

It depends on the size of the preview, since the whole frame is returned in a single-dimension array.

For example, if the preview is set to 320×240, the pixel at (20,2) (the twenty-first pixel of the third line) corresponds to index 660 of the pixel array, because:

320*2 + 20 = 660
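The arithmetic above can be wrapped in a tiny helper. This is only a plain-Java sketch with a made-up class name, assuming zero-based x and y and a row-by-row array like the one filled by decodeYUV420SP():

```java
public class PixelIndex {

    // Row-major index of the pixel at column x, row y (both zero-based).
    static int indexOf(int x, int y, int width) {
        return y * width + x;
    }

    public static void main(String[] args) {
        int width = 320, height = 240;
        int[] pixels = new int[width * height];

        // Mark the pixel at (20,2) as opaque red, then read it back by its flat index.
        pixels[indexOf(20, 2, width)] = 0xffff0000;
        System.out.println(indexOf(20, 2, width));            // 660
        System.out.println(Integer.toHexString(pixels[660])); // ffff0000
    }
}
```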

it works great.

but can i ask some question again?

i tried to create a bitmap from that array. i'm using this code:

Bitmap bmp = Bitmap.createBitmap(pixels, previewSize.width, previewSize.height, Config.ARGB_8888);

when i want to display it in an imageview it throws a null pointer exception.

can you help me ?

thx 4 all :)

That’s strange. Are you creating the Bitmap using the pixels array after the decodeYUV420SP() method call? If you are doing so, it should have worked without any problems.

thx it work, :)

Let me start off by saying this is awesome and just what I was looking for! Thanks!

I have a question though. Let’s say I wanted to display pixels[0] on the top right of the screen? I’ve tried using a textview to no avail. Does anyone have an idea?

Cheers,

Albert

Thanks!

As for your question, maybe you could:

– Use an ImageView

– Create a Bitmap initializing it with this constructor.

– Call setImageBitmap() on the ImageView, passing the Bitmap object you have created in the previous item.

Was that your question? Or do you want to display the pixels[0] value as text?

Thats nice, but how do I use it?

I tried starting the activity from my project, but I cant get it to run.

This is just an example. It’s not meant to be used as a regular application. The value of the first pixel is printed at LogCat’s console, and that’s all it does.

Got it working. The samples for Android 2.3.3 look a bit different.

To avoid a crash of your sample change:

mCamera = Camera.open();

to

if (mCamera == null)

{

mCamera = Camera.open();

}

Thanks for the info! I have added a link to your comment in the post, just in case someone is experiencing a similar problem.

These types of posts are truly helpful for devs, who can learn a lot from them. Such posts are commonly available, but no one explains the steps in such a detailed manner. This helps a lot in developing quality apps.

How to show the new processed image?

I have the image frame in grayscale but I don’t know how to show it.

Can you help me?

I guess you can take the pixels array and pass it as a parameter to the createBitmap() static method, defined in the Bitmap class, as in the earlier comment. Probably, something like:

Bitmap.createBitmap(pixels, previewSize.width, previewSize.height, Config.ARGB_8888)

Nice Article thanks a lot!!!!!!!!!!!!!

I am facing one problem: when i run this code on the emulator (Android 2.3.3) it works fine

but when i run it on a device i am getting an ArrayIndexOutOfBoundsException in the decodeYUV420SP() function. can you help me understand why it is raising this exception?

Hi , i have the same problem , did u manage to get it fixed

Thanks

Hi, very nice article.

However, i have a problem when executing this code in my device.

First of all, the device took some time before the preview could be seen on the screen, then an error prompt came up and forced the program to quit.

I wonder, is it the time taken for the setup that causes the program to crash?

I hope to receive some idea how to solve this problem.

Thank you

Hi, i tried on checking the size of the byte[] data on the onPreviewFrame, i found out that the size of the data is only 8 for each frame, why is that so? any idea?

That’s weird. Only 8 bytes for each frame?

Maybe you could check if your preview is working properly. If it is, you need to check if it has the same dimensions defined by previewSize.

Some devices are really problematic when it comes to accessing the camera. I really can’t remember, but I think you can find a list of them at the Ketai Project page. Another place to check for this type of information is Google Groups.

Hi, thanks for the reply.

I checked the previewSize dimension, it is (800,480).

I tried to find the available preview sizes using getSupportedPreviewSizes(), and it came out with a “null” output.

Now I really wonder if it is a development kit problem instead of a coding problem.

Any other options?

Thanks

hi, this is not working :( I have done exactly what your tutorial says, but after launching the application on the phone (2.1), after 2-3 seconds it stops and says “application has unexpectedly stopped, force close”. when trying to run it on an emulator (2.1), the preview is deformed (slanted) and after pressing the “back” button it stops, saying “application has unexpectedly stopped, force close”

any clues how to fix it?

I tried to use the code exactly as stated but it closes unexpectedly. I added Camera in Uses permission but when i open the app, it shows a blank screen. I am completely new to Android and trying to learn. I have been trying to work on this but no luck. can anyone help!!

how about the size of the image.

can we make it smaller? such as 240 x 180 pixels?

because when we process decodeYUV420SP() with a high resolution camera, performance can be low.

any idea ? thnx before for all, it was great. :)

Maybe you can pass the preview size manually in the setPreviewSize() call inside surfaceChanged(). Like this:

parameters.setPreviewSize(240, 180);

i tried that but it was not working, until i checked the supported preview sizes for my device.

try using parameters.getSupportedPreviewSizes().

it will display our device support for preview frame. :)
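Once the supported sizes are known, one possible approach is to pick the one closest to the size you actually want. The snippet below is only a plain-Java sketch of that idea: the ClosestPreviewSize class is made up for illustration, and the int[] {width, height} pairs are a stand-in for the Camera.Size objects that getSupportedPreviewSizes() returns. Comparing by pixel count is an assumption of this sketch; you may prefer to match the aspect ratio instead.

```java
import java.util.Arrays;
import java.util.List;

public class ClosestPreviewSize {

    // Returns the {width, height} pair whose pixel count is closest to the wanted one,
    // or null if the list is empty.
    static int[] closest(List<int[]> supported, int wantW, int wantH) {
        long wanted = (long) wantW * wantH;
        int[] best = null;
        long bestDiff = Long.MAX_VALUE;
        for (int[] size : supported) {
            long diff = Math.abs((long) size[0] * size[1] - wanted);
            if (diff < bestDiff) {
                bestDiff = diff;
                best = size;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Hypothetical list of sizes reported by a device.
        List<int[]> supported = Arrays.asList(
                new int[] { 176, 144 },
                new int[] { 320, 240 },
                new int[] { 640, 480 });
        int[] best = closest(supported, 240, 180);
        System.out.println(best[0] + "x" + best[1]); // 176x144
    }
}
```

The chosen width and height would then be the values passed to setPreviewSize().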

Cool, thanks for sharing!

Thanks for the tutorial so helpful!

Now that I have the rgb array and am able to make bitmaps etc,

Can anyone link me to where I can learn to apply some different video effects to this array?

Thank you for your sharing! However I had one problem with this:

An error occurred at onPreviewFrame(), saying that it must override a superclass method. Would someone please shed some light on it? Thanks

Hi,

I have a very specific requirement for an android app, which is lucky as I am a real beginner.. but all I really need is to be able to actually make the above code work, in 2.3.3 on a samsung galaxy s2… however I have now spent days trying to combine the above, as described, with the cameraPreview example in apidemos, with no luck. If anybody has got it to work and would be so generous as to forward me their app I would be most grateful…

Richard

You really are my saviour. I have been looking for a way to actually do something useful with that preview-stream without having to rely on android to paint it on the screen for me.

Thank you so, so much.

hi this is chandu, i am working on the same thing but can you please tell me how to convert those rgb pixels into gray scale

please respond to my question

Respected Sir,

I have samsung galaxy ace gt-s5830. The above code as well as the code in the sdk hangs.

Even code which works on other android devices(seen from online demos) fails on my mobile.

However I did manage to run a simple calculator program by myself. Please tell me in which mobile your code works, i.e. what is the brand name and model of your mobile on which you run the above program.

How to save the output of it? Please don't mind, i am very new to this. My code is executing but i don't know how to save.

Also please anyone can give me the complete working code that would be very helpful.

How to get rotated image from decodeYUV420SP, it must be faster than creating a bitmap and then rotating it

Hi

I was wondering how i can display the modified data on the preview in real time?

I’m looking for a way to apply some effects in real time using the camera preview.

thanks